Apache Spark is an open-source framework for cluster computing. It gives programmers an application programming interface centred on the Resilient Distributed Dataset (RDD), a read-only multiset whose data items are distributed over a cluster of machines. Spark was initially released on the 30th of May, 2014, and the latest stable version at the time of writing was released on the 28th of December, 2016.
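To make the idea of a read-only, partitioned multiset concrete, here is a minimal sketch in plain Python. It is a toy model only, not Spark's API: the `ToyRDD` class and its methods are hypothetical names invented for illustration, and the "machines" are simply tuples held in one process.

```python
from collections import Counter


class ToyRDD:
    """Hypothetical stand-in for an RDD: a read-only multiset split into partitions."""

    def __init__(self, partitions):
        # Tuples are immutable, so the stored data cannot be changed afterwards.
        self._partitions = tuple(tuple(p) for p in partitions)

    @classmethod
    def from_items(cls, items, num_partitions=3):
        data = list(items)
        # Deal the items out round-robin; each partition plays the role of one machine's share.
        return cls(data[i::num_partitions] for i in range(num_partitions))

    def map(self, fn):
        # A transformation never edits this object; it builds a new ToyRDD instead.
        return ToyRDD([fn(x) for x in part] for part in self._partitions)

    def partitions(self):
        return self._partitions

    def collect(self):
        # A multiset keeps duplicates but has no meaningful order, so a Counter is returned.
        return Counter(x for part in self._partitions for x in part)


words = ToyRDD.from_items(["spark", "scala", "python", "spark"], num_partitions=2)
upper = words.map(str.upper)  # `words` itself is left untouched
```

Calling `words.collect()` after the `map` still reports the original lower-case items, which is the read-only property in miniature. A real RDD adds what this sketch leaves out, such as distribution over actual machines and recovery from failures.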

First Appearance of Scala

Scala first appeared on the 20th of January, 2004, and was designed to address flaws that had been identified in the Java programming language. It is a general-purpose programming language that supports functional programming and has a strong static type system. Scala compiles to Java bytecode, so its executables run on the Java virtual machine. This interoperability with Java means that libraries written in either language can be used from the other. Scala's many features include type inference, lazy evaluation, immutability and pattern matching. Its advanced type system supports covariance and contravariance, algebraic data types and more. The latest stable release at the time of writing was on the 5th of December, 2016. The name combines two words, Scalable and Language, which signifies that the language is meant to grow with the demands of its users.

Importance of Python as Programming Language

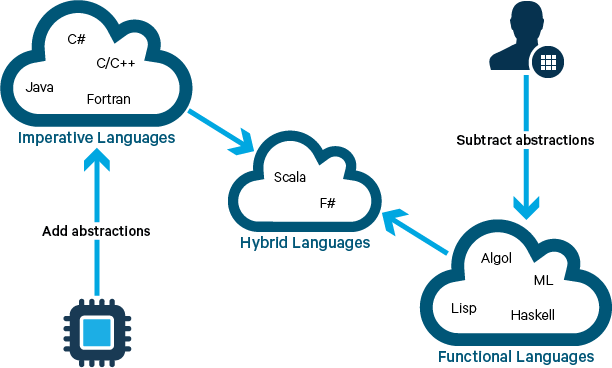

Python is a high-level programming language that was designed for general-purpose programming. It was first released on the 20th of February, 1991, and the latest stable version at the time of writing was released on the 23rd of December, 2016. It lets users write programs in fewer lines than C++ or Java would need. Python uses a dynamic type system and supports multiple programming paradigms, including functional, object-oriented and imperative programming.
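As a small illustration of those paradigms, the sketch below computes the same value, the sum of the squares of the even numbers in a list, in three styles. The function and class names are made up for this example.

```python
from functools import reduce

numbers = [1, 2, 3, 4, 5, 6]


def sum_even_squares_imperative(values):
    # Imperative style: an explicit loop that updates a running total.
    total = 0
    for v in values:
        if v % 2 == 0:
            total += v * v
    return total


def sum_even_squares_functional(values):
    # Functional style: filter and reduce, with no variable being reassigned.
    evens = filter(lambda v: v % 2 == 0, values)
    return reduce(lambda acc, v: acc + v * v, evens, 0)


class EvenSquareSummer:
    # Object-oriented style: the data and the behaviour live together in one object.
    def __init__(self, values):
        self.values = list(values)

    def total(self):
        return sum(v * v for v in self.values if v % 2 == 0)


results = (
    sum_even_squares_imperative(numbers),
    sum_even_squares_functional(numbers),
    EvenSquareSummer(numbers).total(),
)
```

All three entries in `results` are 56 (4 + 16 + 36). No type declarations appear anywhere, which is the dynamic type system at work.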

Designing of the Spark Framework

The Spark framework was written in Scala, and as a result Scala offers better performance on it. Spark applications written in Scala execute much faster than Spark applications written in Python. Scala is native to both Hadoop and Spark, as the libraries used in both frameworks are written either in Scala or in Java, which Scala can access directly. The main downside of Scala is its syntax, which can seem dated and hard to understand. With dedicated practice, however, the language can be mastered.

Easy Understanding of the Concept

Python, on the other hand, is much simpler and therefore much easier to understand. With its vast collection of libraries, Python users can take on almost any kind of work. Its analytics and machine learning libraries are particularly strong and make life much easier for users. However, Python is slower in execution, which puts off many users and makes it less suited to the Hadoop environment.

Best Way to Learn Scala

One would do well to learn Scala, as it is the preferred programming language for Spark, though it might take some time. The syntax is complicated, but it is worth the effort. Once Scala is mastered, both the Hadoop and Spark environments become available and the programmer can make full use of them. Python may be simpler and easier to understand and work with, but it doesn't offer the speed that is required for seamless working in Spark.